Prediction intervals with missing data

Department of Methodology and Statistics, Utrecht University

Uncertainty quantification

Proper uncertainty quantification is essential in prediction settings

- Expected grade

- Election polling

- Package delivery

Prediction interval

A range of values \([\hat y_l, \hat y_u]\) that covers the true value \(y\) of an unseen case with probability \(1-\alpha\)
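You can check this property empirically. Over many unseen cases, the fraction of intervals that contain the true value should be close to \(1-\alpha\). The minimal Python sketch below does this check. The function name `empirical_coverage` and the toy values are illustrative only.

```python
def empirical_coverage(lower, upper, y):
    """Fraction of cases whose true value y lies inside [lower, upper]."""
    if not (len(lower) == len(upper) == len(y)) or not y:
        raise ValueError("inputs must be non-empty and of equal length")
    hits = sum(lo <= yi <= up for lo, up, yi in zip(lower, upper, y))
    return hits / len(y)


# Toy example: 3 of the 4 intervals contain the true value.
lower = [0.0, 1.0, 2.0, 3.0]
upper = [1.0, 2.0, 3.0, 4.0]
y = [0.5, 1.5, 3.5, 3.0]
print(empirical_coverage(lower, upper, y))  # 0.75
```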

Missing data complicates prediction

How to deal with missing predictors?

- Complete case analysis? No.

- Imputation: single (deterministic) or multiple (stochastic)?

- Single imputation: unbiased predictions (Little 1992), but invalid uncertainty quantification (Van Buuren 2018; Zaffran et al. 2024).

- Multiple imputation: valid for inference, but how to use for prediction uncertainty?
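The sketch below contrasts deterministic and stochastic imputation for a single incomplete predictor. It is a simplified illustration with made-up data. The helper names `fit_line` and `impute_x2` are hypothetical. The stochastic variant only adds residual noise. Proper multiple imputation (Van Buuren 2018) would also reflect uncertainty in the regression parameters.

```python
import random


def fit_line(x, y):
    """OLS intercept, slope and residual SD for y ~ x (one predictor)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    intercept = my - slope * mx
    rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    return intercept, slope, (rss / (n - 2)) ** 0.5


def impute_x2(x1, x2, rng=None):
    """Fill None entries of x2 from a regression of x2 on x1.

    rng=None: deterministic (single) imputation; values lie on the fitted line.
    rng given: add N(0, sigma^2) noise, a simplified stochastic imputation
    that ignores uncertainty in the fitted intercept and slope.
    """
    pairs = [(a, b) for a, b in zip(x1, x2) if b is not None]
    a0, a1, sigma = fit_line([a for a, _ in pairs], [b for _, b in pairs])
    filled = []
    for a, b in zip(x1, x2):
        if b is not None:
            filled.append(b)                 # observed values are kept
        else:
            pred = a0 + a1 * a
            filled.append(pred if rng is None else pred + rng.gauss(0.0, sigma))
    return filled


x1 = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
x2 = [0.1, 0.9, 2.2, None, 3.8, None]
single = impute_x2(x1, x2)                             # one completed dataset
rng = random.Random(2024)
multiple = [impute_x2(x1, x2, rng) for _ in range(5)]  # m = 5 completed datasets
print(single[3], [round(d[3], 2) for d in multiple])
```

Under single imputation, the imputed values carry no variability of their own. Across the \(m\) stochastic datasets they differ, and that spread propagates into the predictions.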

Calculating prediction intervals

Prediction uncertainty¹

\(U =\) Residual variance + model uncertainty

With missing data

Additional assumption: the imputation model is correctly specified

Prediction uncertainty with missing data¹

\(T =\) Residual variance + model uncertainty + imputation uncertainty²

Evaluation (simulation)

Linear regression model: \(y = X\beta + \varepsilon\)

\(X \sim MVN(0, \Sigma)\)

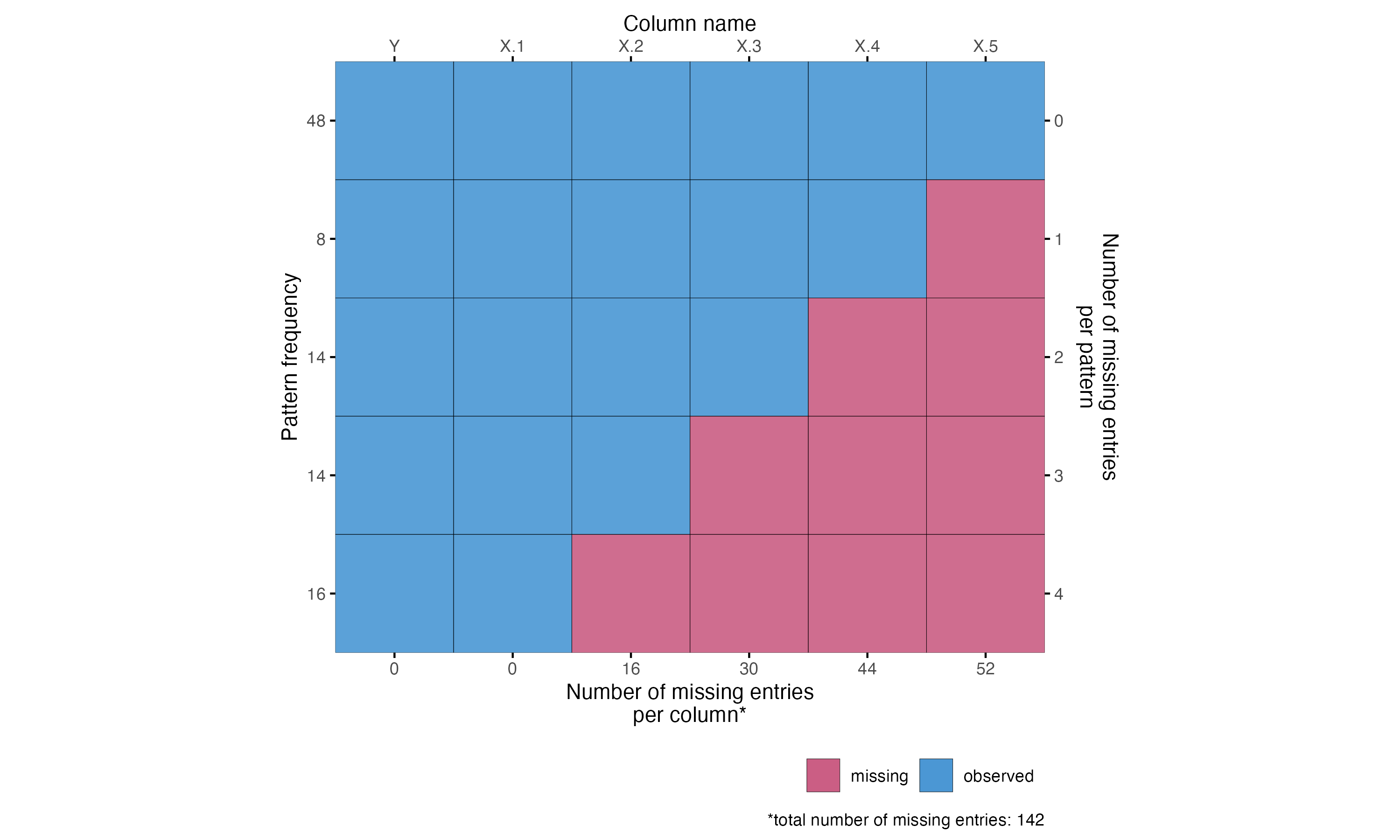

MAR missingness in the training data, the test data, or both

Varied: training sample size and correlation between predictors
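Below is a hypothetical data-generating sketch in the spirit of this design. It uses two standard-normal predictors with correlation `rho`, \(y = X\beta + \varepsilon\), and MAR missingness in `x2` whose probability depends only on the always-observed `x1`. The coefficients, noise level, logistic missingness mechanism and the `simulate` helper are assumptions for illustration, not the study's exact settings.

```python
import math
import random


def simulate(n, rho, beta=(1.0, 1.0), sigma=1.0, make_missing=True, seed=None):
    """Hypothetical sketch (not the study's settings).

    x1, x2 ~ standard bivariate normal with correlation rho;
    y = beta1 * x1 + beta2 * x2 + eps, eps ~ N(0, sigma^2);
    if make_missing, x2 becomes None with probability 1 / (1 + exp(-x1)),
    which depends only on the observed x1 (MAR).
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        x1 = z1
        x2 = rho * z1 + math.sqrt(1.0 - rho ** 2) * z2  # corr(x1, x2) = rho
        y = beta[0] * x1 + beta[1] * x2 + rng.gauss(0.0, sigma)
        p_miss = 1.0 / (1.0 + math.exp(-x1))            # MAR mechanism
        if make_missing and rng.random() < p_miss:
            x2 = None
        rows.append((x1, x2, y))
    return rows


# Scenario "missingness in training data only": incomplete train, complete test.
train = simulate(500, rho=0.5, seed=1)
test = simulate(1000, rho=0.5, make_missing=False, seed=2)
print(sum(r[1] is None for r in train) / len(train))
```

Toggling `make_missing` per dataset covers the three scenarios (training only, test only, both).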

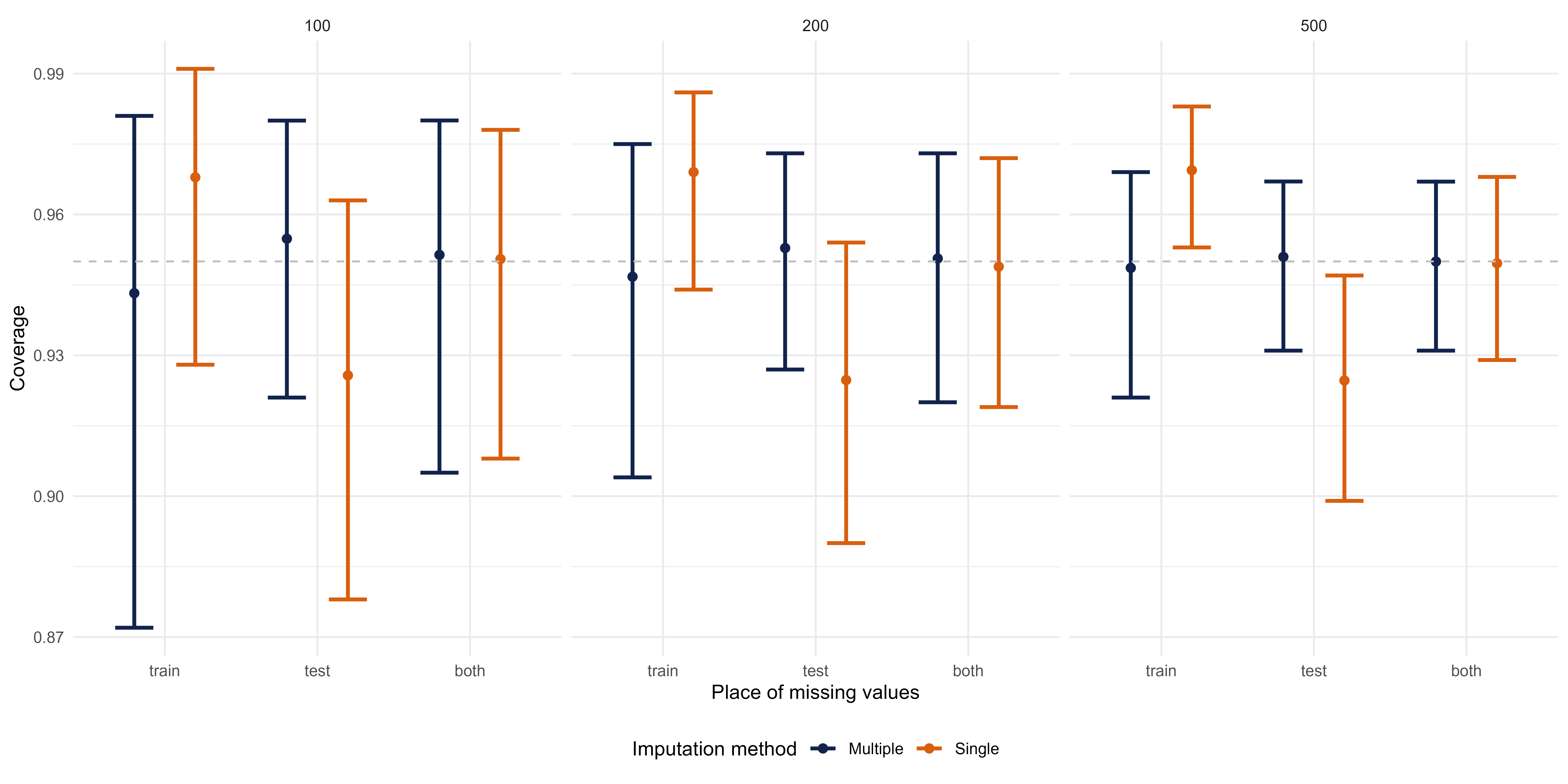

Marginal coverage results

Marginal coverage (y-axis) for multiple and single imputation, depending on whether missingness occurs only in the training data, only in the test data, or in both, for different sizes of the training data.

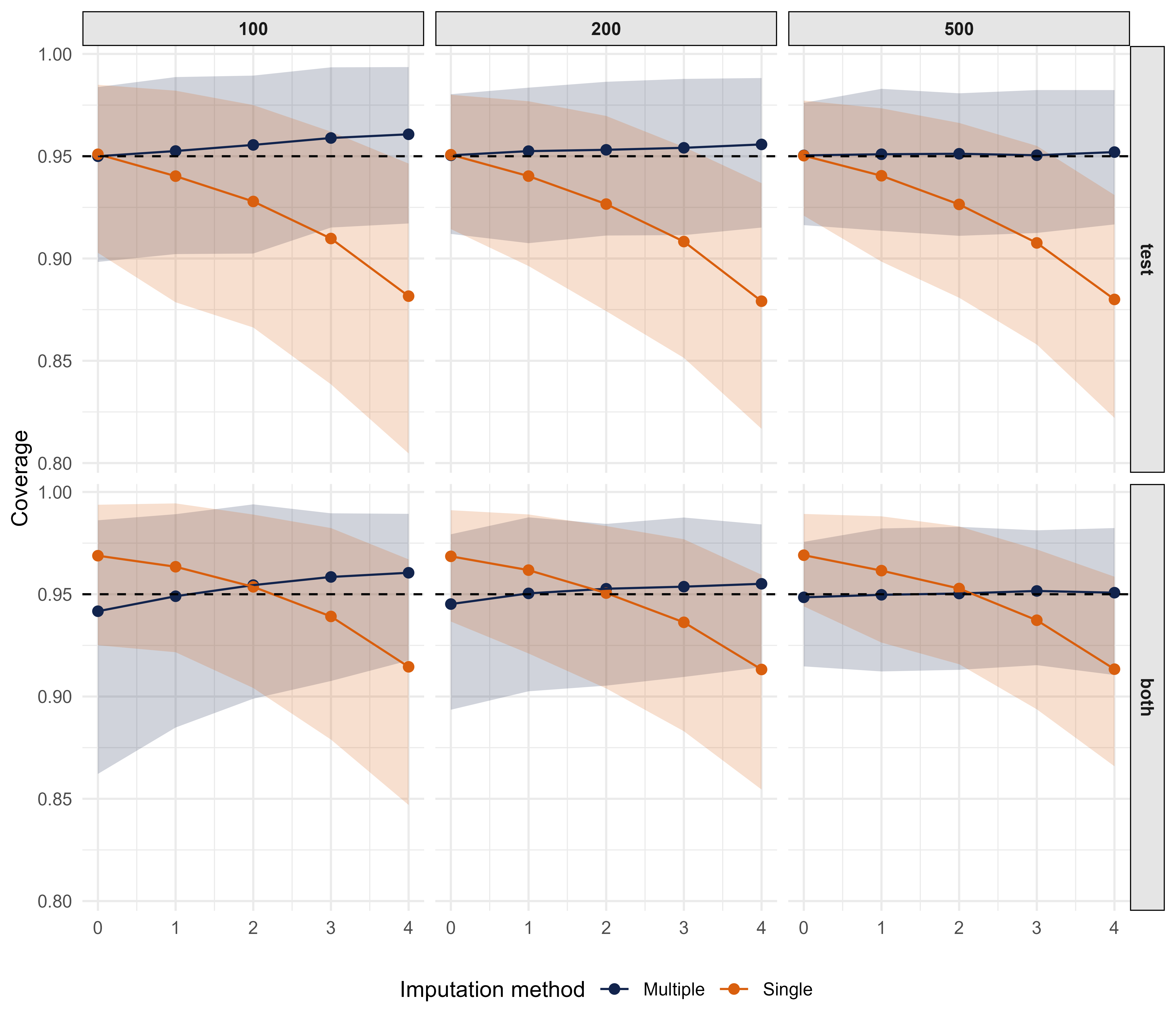

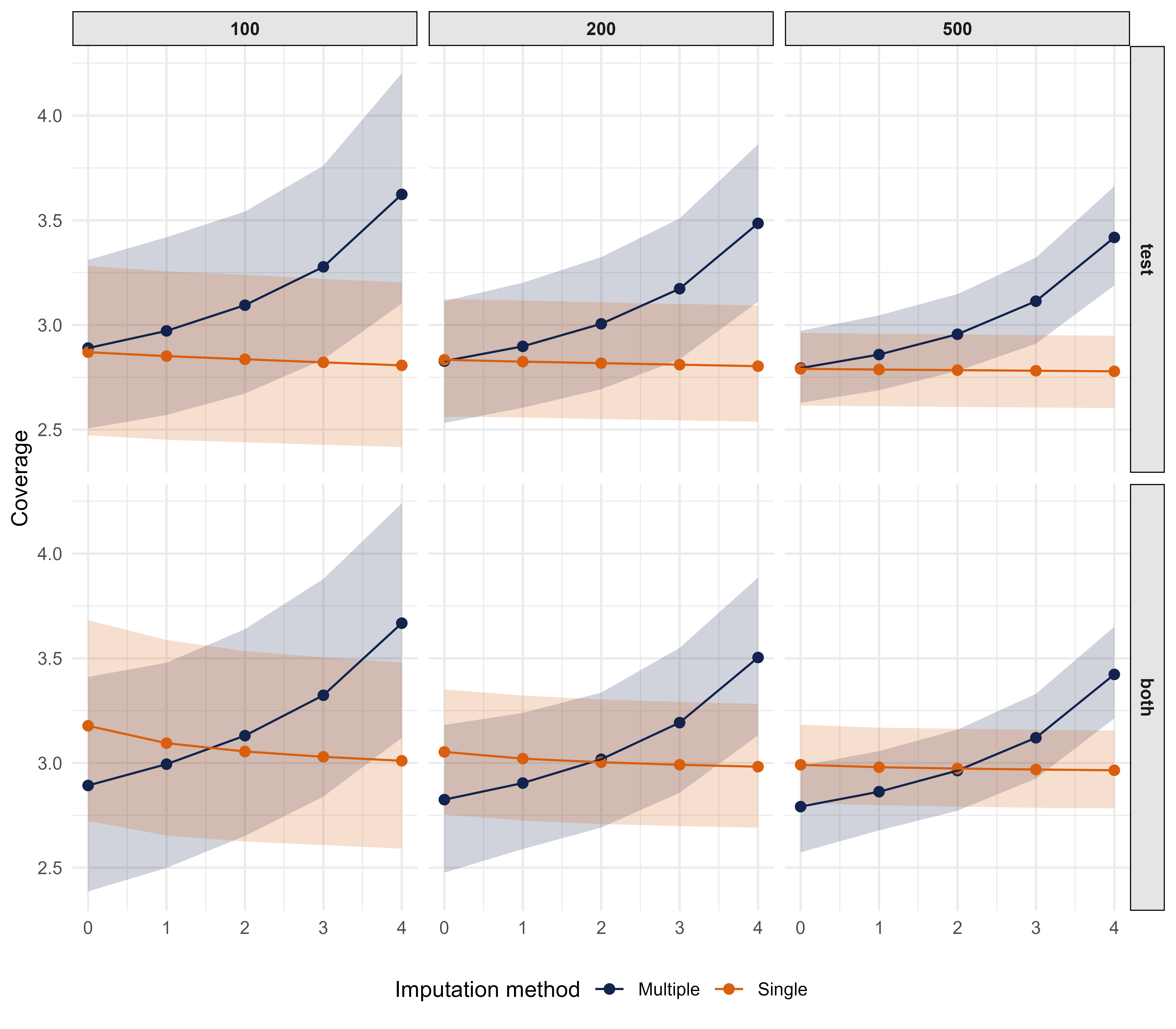

Conditional coverage results
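Conditional coverage refines the marginal check by computing coverage separately within subgroups of test cases. The minimal sketch below groups cases by missingness pattern. That grouping is a hypothetical choice, since this text does not state the conditioning used in the figures.

```python
from collections import defaultdict


def conditional_coverage(lower, upper, y, groups):
    """Coverage computed separately within each group of test cases."""
    hits, counts = defaultdict(int), defaultdict(int)
    for lo, up, yi, g in zip(lower, upper, y, groups):
        counts[g] += 1
        hits[g] += lo <= yi <= up  # True counts as 1
    return {g: hits[g] / counts[g] for g in counts}


lower = [0.0, 1.0, 2.0, 3.0]
upper = [1.0, 2.0, 3.0, 4.0]
y = [0.5, 1.5, 3.5, 3.0]
groups = ["complete", "complete", "x2 missing", "x2 missing"]
print(conditional_coverage(lower, upper, y, groups))
# {'complete': 1.0, 'x2 missing': 0.5}
```

A method can reach nominal marginal coverage while over-covering complete cases and under-covering incomplete ones. The per-group breakdown exposes this.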

Multiple imputation produces prediction intervals that yield nominal coverage and scale with imputation uncertainty.

The approach is readily available in the mice package. Other (conformal) approaches (e.g., Zaffran et al. 2023, 2024) assume MCAR and require very large samples.

More research is needed to test our method beyond linear regression.

References

Prediction interval calculation

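Standard linear model prediction variance for a new case with predictor vector \(x\) \[ U = \hat \sigma^2 (1 + x^T (X^TX)^{-1} x) \] and prediction interval \[ [\hat y - t_{\alpha/2, n-p}\sqrt U, \hat y + t_{\alpha/2, n-p}\sqrt U], \] where \(t_{\alpha/2, n-p}\) is the upper \(\alpha/2\) critical value of a \(t\)-distribution with \(n - p\) degrees of freedom, \(n\) is the number of training cases and \(p\) is the number of regression coefficients.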
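The formula can be sketched in standard-library Python for the special case of an intercept plus one predictor (\(p = 2\)). In that case the quadratic form \(x^T (X^TX)^{-1} x\) reduces to \(1/n + (x_0 - \bar x)^2 / S_{xx}\). The helper `t_quantile` is a hypothetical hand-rolled substitute for a library routine such as `scipy.stats.t.ppf`. It inverts the \(t\) CDF numerically and is intended for \(\nu \ge 1\). The data are made up.

```python
import math
from statistics import NormalDist, fmean


def t_quantile(p, df, n_steps=400):
    """p-quantile (0.5 <= p < 1) of Student's t with df degrees of freedom.

    Substituting t = sqrt(df) * tan(theta) gives
    P(0 <= T <= t) = K * integral_0^theta cos(u)**(df - 1) du,
    K = Gamma((df + 1) / 2) / (sqrt(pi) * Gamma(df / 2)).
    The integral uses Simpson's rule; theta is found by bisection.
    """
    if df > 1e5:  # numerically indistinguishable from N(0, 1)
        return NormalDist().inv_cdf(p)
    k = math.exp(math.lgamma((df + 1) / 2) - math.lgamma(df / 2)) / math.sqrt(math.pi)

    def mass(theta):  # P(0 <= T <= sqrt(df) * tan(theta))
        h = theta / n_steps
        s = 1.0 + math.cos(theta) ** (df - 1)
        for i in range(1, n_steps):
            s += (4 if i % 2 else 2) * math.cos(i * h) ** (df - 1)
        return k * s * h / 3

    lo, hi = 0.0, math.pi / 2
    for _ in range(60):
        mid = (lo + hi) / 2
        if mass(mid) < p - 0.5:
            lo = mid
        else:
            hi = mid
    return math.sqrt(df) * math.tan((lo + hi) / 2)


def prediction_interval(x, y, x0, alpha=0.05):
    """(1 - alpha) prediction interval at x0 for y = b0 + b1 * x + eps (p = 2)."""
    n, p = len(x), 2
    mx, my = fmean(x), fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    b1 = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    b0 = my - b1 * mx
    sigma2 = sum((yi - b0 - b1 * xi) ** 2 for xi, yi in zip(x, y)) / (n - p)
    # x^T (X^T X)^{-1} x for x = (1, x0) equals 1/n + (x0 - mean x)^2 / Sxx
    u = sigma2 * (1 + 1 / n + (x0 - mx) ** 2 / sxx)
    y_hat = b0 + b1 * x0
    half = t_quantile(1 - alpha / 2, n - p) * math.sqrt(u)
    return y_hat, u, (y_hat - half, y_hat + half)


y_hat, u, (lo, hi) = prediction_interval([0, 1, 2, 3, 4], [0.1, 1.1, 1.9, 3.2, 3.9], x0=2.0)
print(round(y_hat, 2), round(u, 4), round(lo, 3), round(hi, 3))
```

With more predictors, the same steps apply. Only the quadratic form needs a general matrix inverse or a linear-algebra library.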

Prediction intervals with missing data

With missing data, the prediction variance equals \[ T = \bar U + B(1 + 1/m), \] where \(\bar U = \frac{1}{m}\sum_{j=1}^m U_j\) is the average of the prediction variances \(U_j\) computed in each imputed dataset, and \(B = \text{var} [\hat y_j]\) is the between-imputation variance of the predictions over imputations \(j = 1, \dots, m\). The prediction interval equals \[ [\bar {\hat y} - t_{\alpha/2, \nu}\sqrt T, \bar {\hat y} + t_{\alpha/2, \nu}\sqrt T], \] where \(\bar {\hat y} = \frac{1}{m}\sum_{j=1}^m \hat y_j\) and \(\nu\) denotes the missing data degrees of freedom (Barnard and Rubin 1999).
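The pooling step can be sketched as below. It is a minimal standard-library illustration of the formulas above, not the mice implementation. The Barnard and Rubin (1999) degrees of freedom combine \(\nu_{old} = (m-1)/\lambda^2\) and \(\nu_{obs} = \frac{\nu_{com}+1}{\nu_{com}+3}\nu_{com}(1-\lambda)\) as \(\nu = \nu_{old}\nu_{obs}/(\nu_{old}+\nu_{obs})\), with \(\lambda = (1+1/m)B/T\). Taking the complete-data degrees of freedom \(\nu_{com} = n - p\) is an assumption here. The function name `pool_prediction` and the inputs are hypothetical. Given the output, the interval is \(\bar{\hat y} \pm t_{\alpha/2,\nu}\sqrt T\), using any Student-\(t\) quantile routine (e.g., `scipy.stats.t.ppf(1 - alpha / 2, nu)`).

```python
from statistics import fmean, variance


def pool_prediction(y_hats, us, df_com):
    """Pool per-imputation predictions y_hats[j] and prediction variances us[j]
    (j = 1..m) into (y_bar, T, nu).

    df_com is the complete-data degrees of freedom, assumed here to be n - p.
    """
    m = len(y_hats)
    y_bar = fmean(y_hats)          # pooled point prediction
    u_bar = fmean(us)              # average within-imputation variance
    b = variance(y_hats)           # between-imputation variance (denominator m - 1)
    t = u_bar + (1 + 1 / m) * b    # total prediction variance T
    lam = (1 + 1 / m) * b / t      # share of T due to missing data
    nu_obs = (df_com + 1) / (df_com + 3) * df_com * (1 - lam)
    if b == 0:                     # no spread across imputations: nu_old is infinite
        return y_bar, t, nu_obs
    nu_old = (m - 1) / lam ** 2
    return y_bar, t, nu_old * nu_obs / (nu_old + nu_obs)


y_bar, t, nu = pool_prediction(
    y_hats=[2.0, 2.2, 1.9, 2.1, 2.05],   # made-up predictions from m = 5 imputations
    us=[0.04, 0.05, 0.045, 0.04, 0.05],  # made-up per-imputation variances U_j
    df_com=98,
)
print(round(y_bar, 3), round(t, 4), round(nu, 2))
```

Larger between-imputation spread increases both \(T\) and \(\lambda\), which lowers \(\nu\). Both changes widen the interval.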